Inferring gender from images

On this page, you can find information on gender inference methods that rely on images only. We describe their design and underlying processes as well as their limitations.

To see how image-based algorithms perform in comparison with other methods, please visit our performance page.

Deepface

Deepface is a Python framework wrapping several state-of-the-art models for facial recognition and facial attribute analysis (age, gender, emotion, and race).

How it works

By default, Deepface uses the VGG_Face model for facial recognition and attribute analysis. This model is based on a convolutional neural network (CNN) trained on a dataset of 2.6 million images of over 2.6 thousand celebrities. More precisely, the dataset consists of attribute information for the celebrities, drawn from the Freebase knowledge graph, and corresponding images of their faces from the Internet Movie Database (IMDb) and from Google and Bing Image Search. Given an image of a person, VGG_Face infers their gender, age, race, and emotion. For information on the other models included in Deepface, please consult the project description on PyPI. If you are interested in how CNNs work, you can learn more in this article.

Installation

The easiest way to install Deepface is from PyPI, for example by running pip install deepface.

How to use

While Deepface covers multiple face recognition tasks, we are only interested in inferring a person's gender in this overview of methods. This is handled by the analyze function. It takes an image path or a list of image paths as input and outputs the demographic attributes specified with the actions parameter. Here is a code example for inferring a person's gender:

from deepface import DeepFace

demography = DeepFace.analyze("img4.jpg", actions = ['gender'])

print("Gender: ", demography["gender"])
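
The shape of the value returned by analyze appears to differ between Deepface releases. As far as we can tell, older releases return a single dictionary with a gender label, while newer ones return a list with one dictionary per detected face, including per-class scores and a dominant_gender key. The following is a minimal sketch of a helper that accepts either shape. The helper name extract_genders and the sample values are hypothetical, so please check the output of your installed version before relying on it.

```python
def extract_genders(result):
    """Return one gender label per face from an analyze() result."""
    # Wrap a single-dictionary result so both shapes are handled the same way.
    faces = result if isinstance(result, list) else [result]
    labels = []
    for face in faces:
        if "dominant_gender" in face:
            # Assumed newer shape: the winning label is stored separately.
            labels.append(face["dominant_gender"])
            continue
        gender = face["gender"]
        if isinstance(gender, dict):
            # Per-class scores without a dominant label: pick the highest.
            gender = max(gender, key=gender.get)
        labels.append(gender)
    return labels


# Hypothetical results, written by hand to illustrate both shapes.
older_style = {"gender": "Woman"}
newer_style = [{"gender": {"Woman": 98.2, "Man": 1.8}, "dominant_gender": "Woman"}]

print(extract_genders(older_style))
print(extract_genders(newer_style))
```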

Deepface also offers an API, which you can embed into your applications. Please refer to the information on the GitHub page or this demo video for more details on using the API.

You can try out this method with executable Python notebooks powered by Binder. Click the button below to launch it and follow the instructions:

Binder is a service that deploys computing environments in the cloud.

Keep in mind that these notebooks are meant to demonstrate the method and are not designed for large datasets.

Important: If you would like to run the model on large-scale data, you should install Deepface locally and pass a preloaded model through the models parameter of the analyze function, so that the model is loaded only once, as follows:

from deepface import DeepFace

from deepface.extendedmodels import Gender

models = {}

models["gender"] = Gender.loadModel()

DeepFace.analyze(img_path, actions=["gender"], models=models, enforce_detection=True)

Limitations

The model has the following limitations:

- Deepface can predict gender only if an image contains one recognizable face. If your data contains images with multiple faces or images without any people, the model might not be able to provide gender estimates for them.

- Predictions for women are usually less accurate than for men.

- Predictions for images of people with darker skin tones and people with facial accessories are also less accurate.
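
One way to cope with the first limitation is to record images without a detectable face as missing values instead of letting the whole run fail. The sketch below assumes that analyze raises a ValueError when enforce_detection=True and no face is found, which is our understanding of Deepface's behavior and should be verified for your installed version. The fake_analyze function and the file names are hypothetical stand-ins so that the example runs without Deepface; in practice you would pass DeepFace.analyze instead.

```python
def infer_gender_or_none(img_path, analyze):
    """Return the analyze() result, or None if no face could be detected."""
    try:
        return analyze(img_path, actions=["gender"], enforce_detection=True)
    except ValueError:
        # Assumption: Deepface signals "no face detected" with a ValueError.
        return None


def fake_analyze(img_path, actions, enforce_detection=True):
    """Hypothetical stand-in for DeepFace.analyze, used only for this demo."""
    if "landscape" in img_path:
        raise ValueError("Face could not be detected.")
    return {"gender": "Woman"}


paths = ["portrait.jpg", "landscape.jpg"]  # hypothetical file names
results = {path: infer_gender_or_none(path, fake_analyze) for path in paths}
print(results)
```

Images that map to None can then be counted or inspected separately rather than silently dropped.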

M3

How to use

M3 is a Python implementation of the M3 model (Multimodal, Multilingual, and Multi-attribute). M3 is primarily a mixed method that performs best when provided with both textual and visual input. However, you can also use it to predict gender from images only. For details regarding installation and how it works, please visit the mixed-methods section of our methods overview.

Commercial tools

Due to their constant changes, the tools presented here were not part of our evaluation presented in the Performance section.

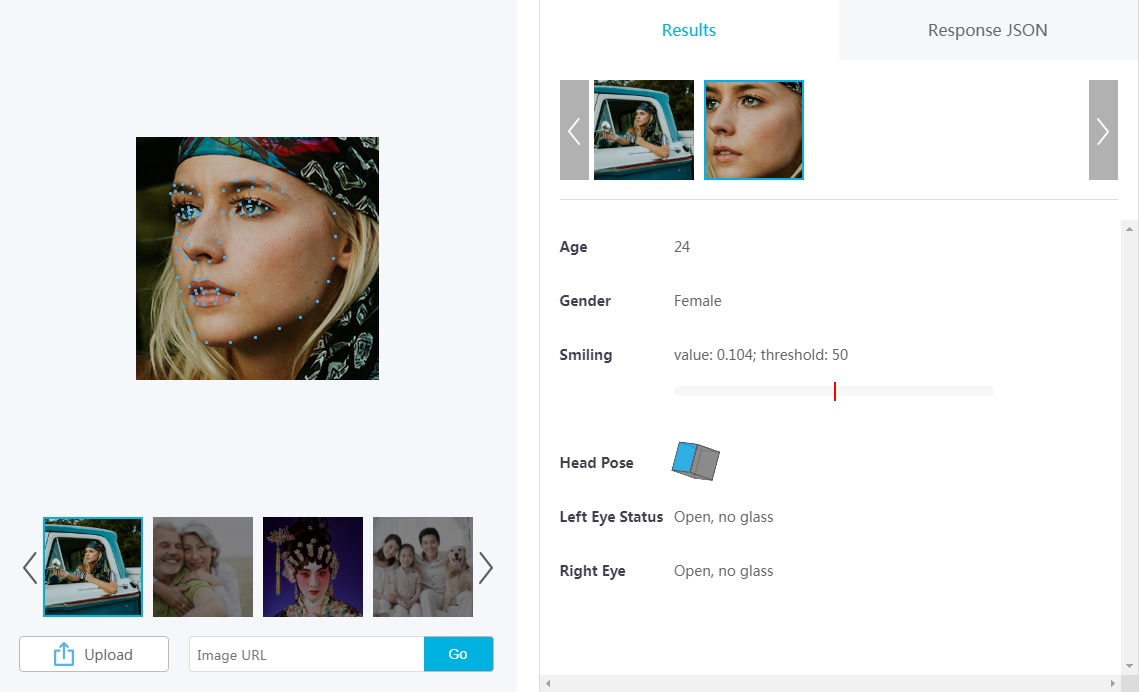

On this website, we present information on the two most popular commercial services for gender inference on images: Face++ and Azure Face API.

Face++ uses the Detect API to detect human faces in the provided images. For each face detected in an image, the API returns a unique ID, a rectangle area for the face location in the image, face attributes (e.g., gender), and an array of the key landmarks (e.g., the point corresponding to the left eye). The free version returns inferences only for the five largest detected faces. If your images contain more than five faces, you can use the Face Analyze API to get face attributes, such as gender, based on the IDs returned by the Detect API.

Similarly, the Azure Face API detects human faces in an image and returns the rectangle coordinates of their locations. Optionally, face detection can extract a series of face-related attributes, such as gender. To use these tools locally, you must set up a user account and request an API key.
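
To illustrate the Face++ Detect response described above, here is a minimal sketch that maps each face ID to its inferred gender. The field names (faces, face_token, face_rectangle, attributes, gender, value) reflect our reading of the Face++ documentation and are assumptions to verify against the current API reference. The sample response is written by hand and is not real API output.

```python
def gender_by_face(response):
    """Map each face ID (face_token) to its inferred gender, or None."""
    genders = {}
    for face in response.get("faces", []):
        # Faces beyond the returned attribute limit may lack "attributes".
        attributes = face.get("attributes", {})
        genders[face["face_token"]] = attributes.get("gender", {}).get("value")
    return genders


# Hypothetical, hand-written response with two detected faces.
sample_response = {
    "faces": [
        {
            "face_token": "token-a",
            "face_rectangle": {"top": 10, "left": 20, "width": 120, "height": 120},
            "attributes": {"gender": {"value": "Female"}},
        },
        {
            "face_token": "token-b",
            "face_rectangle": {"top": 40, "left": 200, "width": 30, "height": 30},
        },
    ]
}

print(gender_by_face(sample_response))
```

Face IDs that map to None are the ones you would pass on to the Face Analyze API to request the missing attributes.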

Limitations

- In contrast to open-source methods, commercial tools restrict the amount of data you can process and the number of API keys you can register per user. To send an unlimited number of queries per second, you must subscribe to a paid membership.

- The coverage (i.e., the share of images for which you get a score back) can be only around 80%.

- Due to their constant changes, the tools were not part of our own evaluation. Buolamwini and Gebru, however, have evaluated the tools in this paper.
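
Coverage as defined above can be computed directly from your own results. The following is a small sketch that assumes missing scores are stored as None; the example predictions are made up for illustration.

```python
def coverage(predictions):
    """Share of images for which a prediction (not None) was returned."""
    if not predictions:
        return 0.0
    answered = sum(1 for prediction in predictions if prediction is not None)
    return answered / len(predictions)


# Hypothetical results for five images; None marks an image without a score.
predictions = ["Female", None, "Male", "Male", None]
print(f"Coverage: {coverage(predictions):.0%}")  # prints "Coverage: 60%"
```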